From the December 2021 issue of Apollo. Preview and subscribe here.

In late September, Art Recognition, a Swiss company offering authentication of artworks by means of artificial intelligence (AI), announced that it had found, with 92 per cent certainty, it claimed, that Rubens’ Samson and Delilah at the National Gallery had been incorrectly attributed to the painter. The story had no trouble making headlines, although some journalists were quick to notice the absence of a proper peer-reviewed paper. As for the museum: ‘The Gallery always takes note of new research,’ its spokesperson responded, adding: ‘We await its publication in full so that any evidence can be properly assessed.’ Eye-catching applications of AI such as this often attract media attention, but perhaps the biggest effect of unsupported claims has been to reinforce scepticism about the applications of machine learning to the field of art history.

Before a machine can hope to do anything so sophisticated as authenticating an image, it first needs to be able to see. The algorithmic architecture required for so-called computer vision is known as a convolutional neural network (CNN). This allows the computer to make sense of the images fed to it by detecting edges in the composition or isolating objects by grouping together similar pixels in a process known as image segmentation.
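To give a rough sense of what 'detecting edges' involves, here is a minimal sketch in Python. It is an illustration only, not the method of any company or research group mentioned here. It assumes a toy greyscale image held as a grid of numbers and uses the classic Sobel filters, hand-written kernels similar in spirit to those a CNN learns for itself in its earliest layers. The names `convolve`, `edge_strength` and `toy_image` are invented for the example.

```python
import math

# Sobel kernels: fixed 3x3 filters that respond to horizontal and vertical
# changes in brightness. A CNN learns its own filters; these are hand-made.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def convolve(image, kernel):
    """Slide a 3x3 kernel over a 2D grid of pixel values (no padding).

    As in most CNN libraries, the kernel is not flipped, so strictly
    speaking this is a cross-correlation.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows - 2):
        out.append([
            sum(kernel[i][j] * image[r + i][c + j]
                for i in range(3) for j in range(3))
            for c in range(cols - 2)
        ])
    return out


def edge_strength(image):
    """Combine horizontal and vertical responses into one edge magnitude."""
    gx = convolve(image, SOBEL_X)
    gy = convolve(image, SOBEL_Y)
    return [[math.sqrt(x * x + y * y) for x, y in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


# Toy image (an assumption for illustration): dark left half, bright right half.
toy_image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]
edges = edge_strength(toy_image)
```

In this toy case the response is zero over the flat areas and strong only where dark meets bright, which is the sense in which the filter 'finds' the edge.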

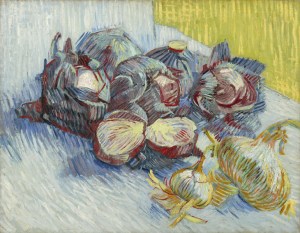

Red Cabbages and Onions (1887), Vincent Van Gogh.

Results of brushstroke extraction on Van Gogh’s ‘Red Cabbages and Onions’ undertaken in 2012 by the James Z. Wang Research Group at Pennsylvania State University. Photo: © James Z. Wang Research Group at Pennsylvania State University

Using such methods last year, Art Recognition announced that it had verified a self-portrait attributed to Van Gogh at the National Museum in Oslo. The declaration received little fanfare. The painting had already been authenticated by specialists through a more traditional, connoisseurial analysis of style, material and provenance. As a guinea pig for newer techniques, however, Van Gogh surely makes more sense than Rubens, whose reliance on assistants has previously complicated authentication efforts. Not only did Van Gogh work alone, but he has one of art history’s most distinctive hands. In 2012, researchers at Penn State University used existing edge-detection and edge-linking algorithms to isolate and automatically extract his brushstrokes, and those of a few of his contemporaries. In the paper ‘Rhythmic Brushstrokes Distinguish van Gogh from His Contemporaries’, they describe how they used statistical analysis to compare the individual brushstrokes and prove how they distinguish Van Gogh from other artists – and how his brushstrokes changed over the course of time. Among the researchers was computer scientist James Wang, who is the first to admit that these rather obvious findings might prompt a Van Gogh specialist to counter, ‘So what?’

We should, however, be encouraged by the modesty of the claims as the same paper also sheds light on the complexity of analysing digital images. Where, for example, Art Recognition has not specified which aspects of Van Gogh’s style it relied upon to train its algorithm, Wang and his team rejected the use of colour and texture at this stage to avoid biases brought about by variations in the digitisation process. Computers are, after all, reading photos of artworks, not the artworks themselves, and there are not always enough high-quality images to build sufficient training datasets, particularly in the case of artists with smaller oeuvres. Like other scientists working in this field whom I have spoken to, Wang openly acknowledges its current limitations. ‘Attribution, especially when we are talking about counterfeits, is an area that I don’t think machine learning is ready for,’ he says.

Computers do, of course, have some advantages over us. Their massive memories mean they can study vast quantities of images at the same time and without losing a single detail, which gives them a far superior aptitude for pattern recognition. This ability has begun to be useful in some art-historical debates and Wang’s most recent project, a collaboration between several members of Penn State’s faculty, is a good example.

The art historian Elizabeth Mansfield had been wondering how John Constable, in his cloud studies, had so accurately captured an entity that is constantly moving and changing. She decided to test the hypothesis, first proposed by Kurt Badt, that Constable was referring to Luke Howard’s nomenclature system for clouds, created in 1802. George Young, a meteorologist at Penn State, was called upon to categorise the works of Constable, and those of other landscape painters including Eugène Boudin and David Cox, according to four main cloud types. An algorithm trained by Wang then learnt to classify a large dataset of photographs of clouds, according to these types, with high accuracy. When the team then put images of the paintings through the algorithm, it was able to assess how accurately it could confirm Young’s labels. From this it inferred the degree of realism exhibited by each artist as compared with the photographs.
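The final step, scoring each artist by how often the algorithm agrees with the expert's labels, can be sketched in a few lines of Python. This is a hedged illustration of the idea as described above, not the Penn State team's code. The function name `agreement_by_artist`, the artist names and the cloud-type labels are all placeholders, and the records are invented to show the arithmetic rather than drawn from the study.

```python
from collections import defaultdict


def agreement_by_artist(records):
    """Return, per artist, the share of paintings on which the classifier's
    predicted cloud type matches the expert's label.

    records: iterable of (artist, expert_label, predicted_label) tuples.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for artist, expert_label, predicted_label in records:
        totals[artist] += 1
        hits[artist] += int(expert_label == predicted_label)
    return {artist: hits[artist] / totals[artist] for artist in totals}


# Invented placeholder records: NOT data from the study.
records = [
    ("artist_1", "type_a", "type_a"),
    ("artist_1", "type_b", "type_b"),
    ("artist_1", "type_c", "type_c"),
    ("artist_1", "type_d", "type_a"),
    ("artist_2", "type_a", "type_b"),
    ("artist_2", "type_c", "type_c"),
]
scores = agreement_by_artist(records)
```

A higher share suggests that an artist's painted clouds look, to a classifier trained only on photographs, more like the real thing. The measure is only as trustworthy as the classifier and the expert labels behind it.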

Cloud Study (1822), John Constable. Yale Center for British Art.

Constable’s studies scored particularly high, apparently supporting Badt’s hypothesis, but other results threw up some surprises. After Constable, the most realistic studies were achieved by the French artist Pierre-Henri de Valenciennes with works completed before Howard released his typology. Valenciennes, at any rate, must have relied on his own observations.

Revelatory also was how the machine had changed Mansfield’s assumptions as an art historian. ‘I have become more aware of my own biases and abilities as a viewer,’ she says. ‘I see computer vision as an interlocutor with the art historian.’ Wang proposes it could also function as a more objective ‘third eye’ that helps art historians better explain what they are seeing. ‘Art historians can have a hunch,’ he says, ‘but they can never show you with a massive amount of evidence. So we provide the evidence.’

Bringing to the arts and humanities more of the empiricism required by the sciences is the aim of Armand Marie Leroi, an evolutionary biologist at Imperial College London. ‘I admire art historians, but I find it frustrating that so much of their detailed knowledge is in their heads,’ he says. ‘I have no way of testing their assertions.’ A formative experience for Leroi was working with the Beazley Archive at Oxford. In his view, John David Beazley’s descriptions of the stylistic traits that led him to identify different Greek vase painters are not backed up by sufficient evidence – however accurate they may be – and have since been used, he says, to weave complex narratives about the history of Greek vases. The limitation, for Leroi, is that ‘it was all in Beazley’s head.’

It is tricky to parse exactly how AI compares to the subjectivity of human judgement, plagued as it is with our biases (often in the form of imperfect data), as well as its own (in the limitations of its architecture). Deep learning can produce a ‘black box’, meaning that how an algorithm reaches its conclusions cannot always be explained. ‘There’s no magic in machines,’ Leroi says. ‘You shouldn’t take their opinions uncritically. The whole point is precisely that you can interrogate them, test them and reproduce the results.’

Leroi favours the kind of formal analysis practised by 20th-century art historians, such as Heinrich Wölfflin. At the World Musea Forum at Asia Art Hong Kong, he recently presented a machine-learning model for dating Iznik tiles by extracting and classifying three typical motifs: the tulip, the carnation and the saz leaf. The project demonstrates how algorithms like these could learn forms of human expertise, in this case that of the Iznik specialist Melanie Gibson, and apply it more widely.

‘In some ways, we’re retrograde. We’re going back to close visual analysis of artworks and not high-level theory,’ says David Stork. Something of a father of the field, Stork lectures on computer vision and image-analysis of art at Stanford and is the author of the upcoming book, Pixels & Paintings: Foundations of Computer-Assisted Connoisseurship. His suggestion seems to be that we might expect the return of a connoisseurial art history that faded once the human mind had fully realised its capacity to practise it.

According to Stork, the next frontier is the mining of images for meaning, despite how this differs according to time, place and style. For now the aim is to achieve this first with highly symbolic Dutch vanitas paintings. ‘I don’t expect in my lifetime we’ll get a computer that can analyse Velázquez’s Las Meninas, a deep and subtle masterpiece that is perhaps the most analysed painting ever made,’ Stork says, but challenges like these won’t be beneficial only to art history. ‘I think art images pose new classes of problems that are going to advance AI,’ he adds.

All the scientists I speak to stress that it is crucial to work closely with art specialists. ‘Cultural context guides the use of computer techniques,’ says Stork, who maintains that experts in art are needed to interpret machine-learning outputs. An instructive example of how this might work is ‘Deep Discoveries’, a project to develop a prototype computer vision search engine as part of the Towards A National Collection programme, which aims to create a virtual home for collections from UK museums, archives and libraries that breaks down the barriers between institutions. The tool would allow users to explore the database visually by matching images of cultural artefacts. ‘We are dealing with abstract notions like visual similarity and motifs and patterns, which have very specific meanings for different users,’ says principal investigator Lora Angelova. Therefore, the push has been towards producing ‘explainable AI’, which uses heat maps to express areas of similarity between two images, allowing users to give the AI feedback by indicating the areas of interest.

So far, much of what has been achieved with machine learning mimics what we already know, but the time is coming to expand these horizons. Adopting the argot of data science, Leroi sees art as existing in a ‘high-dimensional hyperspace’ filled with as-yet-unknown connections and correlations. ‘It is into this space, scarcely graspable by human minds,’ he ventures, ‘that we now cast our machines, await their return, and ask them what they’ve found.’
